In mathematics, approximation theory is concerned with how functions can best be approximated with simpler functions, and with quantitatively characterizing the errors introduced thereby. Note that what is meant by best and simpler will depend on the application.

A closely related topic is the approximation of functions by generalized Fourier series, that is, approximations based upon summation of a series of terms based upon orthogonal polynomials

Contents

- 1 Optimal polynomials

- 2 Chebyshev approximation

- 3 Remez' algorithm

- 4 Main journals

Optimal polynomials

Once the domain and degree of the polynomial are chosen, the polynomial itself is chosen in such a way as to minimize the worst-case error. That is, the goal is to minimize the maximum value of , where P(x) is the approximating polynomial and f(x) is the actual function. For well-behaved functions, there exists an Nth-degree polynomial that will lead to an error curve that oscillates back and forth between

, where P(x) is the approximating polynomial and f(x) is the actual function. For well-behaved functions, there exists an Nth-degree polynomial that will lead to an error curve that oscillates back and forth between  and

and  a total of N+2 times, giving a worst-case error of

a total of N+2 times, giving a worst-case error of  . It is seen that an Nth-degree polynomial can interpolate N+1 points in a curve. Such a polynomial is always optimal. It is possible to make contrived functions f(x) for which no such polynomial exists, but these occur rarely in practice.

. It is seen that an Nth-degree polynomial can interpolate N+1 points in a curve. Such a polynomial is always optimal. It is possible to make contrived functions f(x) for which no such polynomial exists, but these occur rarely in practice.

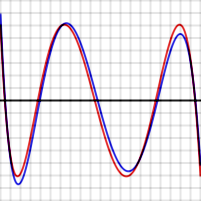

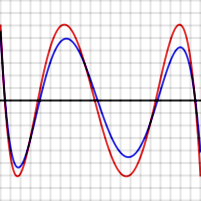

For example the graphs shown to the right show the error in approximating log(x) and exp(x) for N = 4. The red curves, for the optimal polynomial, are level, that is, they oscillate between and

and  exactly. Note that, in each case, the number of extrema is N+2, that is, 6. Two of the extrema are at the end points of the interval, at the left and right edges of the graphs.To prove this is true in general, suppose P is a polynomial of degree N having the property described, that is, it gives rise to an error function that has N + 2 extrema, of alternating signs and equal magnitudes. The red graph to the right shows what this error function might look like for N = 4. Suppose Q(x) (whose error function is shown in blue to the right) is another N-degree polynomial that is a better approximation to f than P. In particular, Q is closer to f than P for each value xi where an extreme of P−f occurs, soWhen a maximum of P−f occurs at xi, thenAnd when a minimum of P−f occurs at xi, thenSo, as can be seen in the graph, [P(x) − f(x)] − [Q(x) − f(x)] must alternate in sign for the N + 2 values of xi. But [P(x) − f(x)] − [Q(x) − f(x)] reduces to P(x) − Q(x) which is a polynomial of degree N. This function changes sign at least N+1 times so, by the Intermediate value theorem, it has N+1 zeroes, which is impossible for a polynomial of degree N.

exactly. Note that, in each case, the number of extrema is N+2, that is, 6. Two of the extrema are at the end points of the interval, at the left and right edges of the graphs.To prove this is true in general, suppose P is a polynomial of degree N having the property described, that is, it gives rise to an error function that has N + 2 extrema, of alternating signs and equal magnitudes. The red graph to the right shows what this error function might look like for N = 4. Suppose Q(x) (whose error function is shown in blue to the right) is another N-degree polynomial that is a better approximation to f than P. In particular, Q is closer to f than P for each value xi where an extreme of P−f occurs, soWhen a maximum of P−f occurs at xi, thenAnd when a minimum of P−f occurs at xi, thenSo, as can be seen in the graph, [P(x) − f(x)] − [Q(x) − f(x)] must alternate in sign for the N + 2 values of xi. But [P(x) − f(x)] − [Q(x) − f(x)] reduces to P(x) − Q(x) which is a polynomial of degree N. This function changes sign at least N+1 times so, by the Intermediate value theorem, it has N+1 zeroes, which is impossible for a polynomial of degree N.Chebyshev approximation

One can obtain polynomials very close to the optimal one by expanding the given function in terms of Chebyshev polynomialsand then cutting off the expansion at the desired degree. This is similar to the Fourier analysis of the function, using the Chebyshev polynomials instead of the usual trigonometric functions.If one calculates the coefficients in the Chebyshev expansion for a function:and then cuts off the series after the term, one gets an Nth-degree polynomial approximating f(x).The reason this polynomial is nearly optimal is that, for functions with rapidly converging power series, if the series is cut off after some term, the total error arising from the cutoff is close to the first term after the cutoff. That is, the first term after the cutoff dominates all later terms. The same is true if the expansion is in terms of Chebyshev polynomials. If a Chebyshev expansion is cut off after

term, one gets an Nth-degree polynomial approximating f(x).The reason this polynomial is nearly optimal is that, for functions with rapidly converging power series, if the series is cut off after some term, the total error arising from the cutoff is close to the first term after the cutoff. That is, the first term after the cutoff dominates all later terms. The same is true if the expansion is in terms of Chebyshev polynomials. If a Chebyshev expansion is cut off after , the error will take a form close to a multiple of

, the error will take a form close to a multiple of  . The Chebyshev polynomials have the property that they are level – they oscillate between +1 and −1 in the interval [−1, 1].

. The Chebyshev polynomials have the property that they are level – they oscillate between +1 and −1 in the interval [−1, 1].  has N+2 level extrema. This means that the error between f(x) and its Chebyshev expansion out to

has N+2 level extrema. This means that the error between f(x) and its Chebyshev expansion out to  is close to a level function with N+2 extrema, so it is close to the optimal Nth-degree polynomial.In the graphs above, note that the blue error function is sometimes better than (inside of) the red function, but sometimes worse, meaning that it is not quite the optimal polynomial. Note also that the discrepancy is less serious for the exp function, which has an extremely rapidly converging power series, than for the log function.Chebyshev approximation is the basis for Clenshaw–Curtis quadrature, a numerical integration technique.

is close to a level function with N+2 extrema, so it is close to the optimal Nth-degree polynomial.In the graphs above, note that the blue error function is sometimes better than (inside of) the red function, but sometimes worse, meaning that it is not quite the optimal polynomial. Note also that the discrepancy is less serious for the exp function, which has an extremely rapidly converging power series, than for the log function.Chebyshev approximation is the basis for Clenshaw–Curtis quadrature, a numerical integration technique.Remez' algorithm

The Remez algorithm (sometimes spelled Remes) is used to produce an optimal polynomial P(x) approximating a given function f(x) over a given interval. It is an iterative algorithm that converges to a polynomial that has an error function with N+2 level extrema. By the theorem above, that polynomial is optimal.Remez' algorithm uses the fact that one can construct an Nth-degree polynomial that leads to level and alternating error values, given N+2 test points.Given N+2 test points ,

,  , ...

, ...  (where

(where  and

and  are presumably the end points of the interval of approximation), these equations need to be solved:The right-hand sides alternate in sign.That is,Since

are presumably the end points of the interval of approximation), these equations need to be solved:The right-hand sides alternate in sign.That is,Since , ...,

, ...,  were given, all of their powers are known, and

were given, all of their powers are known, and  , ...,

, ...,  are also known. That means that the above equations are just N+2 linear equations in the N+2 variables

are also known. That means that the above equations are just N+2 linear equations in the N+2 variables  ,

,  , ...,

, ...,  , and

, and  . Given the test points

. Given the test points  , ...,

, ...,  , one can solve this system to get the polynomial P and the number

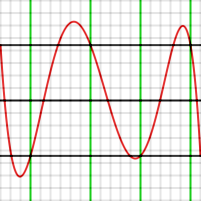

, one can solve this system to get the polynomial P and the number  .The graph below shows an example of this, producing a fourth-degree polynomial approximating

.The graph below shows an example of this, producing a fourth-degree polynomial approximating over [−1, 1]. The test points were set at −1, −0.7, −0.1, +0.4, +0.9, and 1. Those values are shown in green. The resultant value of

over [−1, 1]. The test points were set at −1, −0.7, −0.1, +0.4, +0.9, and 1. Those values are shown in green. The resultant value of  is 4.43 x 10−4Note that the error graph does indeed take on the values

is 4.43 x 10−4Note that the error graph does indeed take on the values at the six test points, including the end points, but that those points are not extrema. If the four interior test points had been extrema (that is, the function P(x)f(x) had maxima or minima there), the polynomial would be optimal.The second step of Remez' algorithm consists of moving the test points to the approximate locations where the error function had its actual local maxima or minima. For example, one can tell from looking at the graph that the point at −0.1 should have been at about −0.28. The way to do this in the algorithm is to use a single round of Newton's method. Since one knows the first and second derivatives of P(x)−f(x), one can calculate approximately how far a test point has to be moved so that the derivative will be zero.

at the six test points, including the end points, but that those points are not extrema. If the four interior test points had been extrema (that is, the function P(x)f(x) had maxima or minima there), the polynomial would be optimal.The second step of Remez' algorithm consists of moving the test points to the approximate locations where the error function had its actual local maxima or minima. For example, one can tell from looking at the graph that the point at −0.1 should have been at about −0.28. The way to do this in the algorithm is to use a single round of Newton's method. Since one knows the first and second derivatives of P(x)−f(x), one can calculate approximately how far a test point has to be moved so that the derivative will be zero.